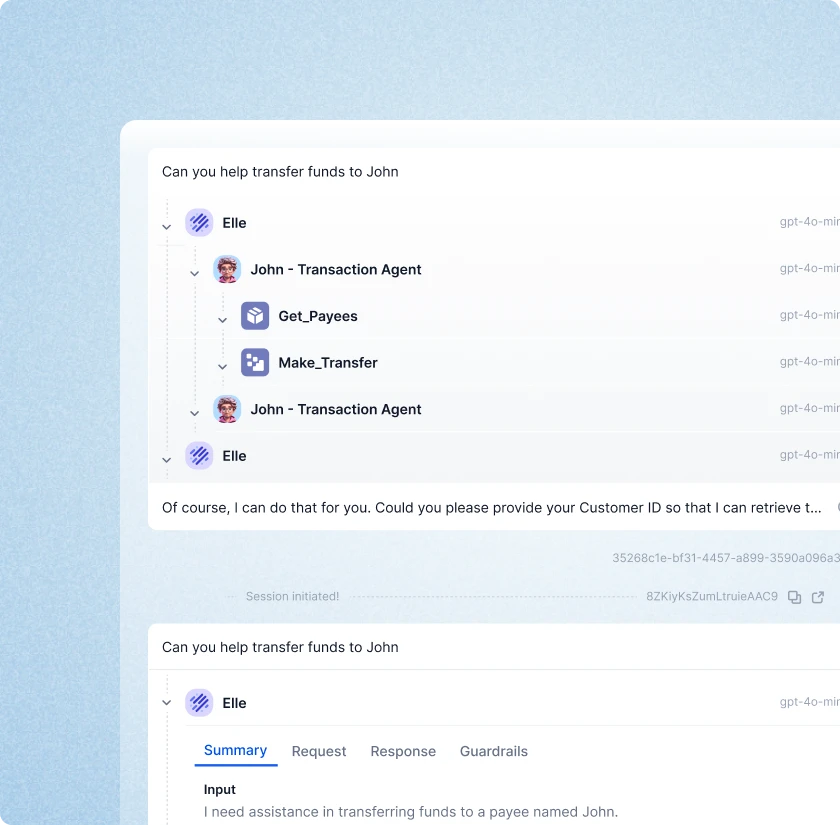

Agent tracing

Track every action your AI agent takes across the entire workflow, including the tools it uses, the AI models it selects, latency, and token usage, for complete visibility and faster debugging.

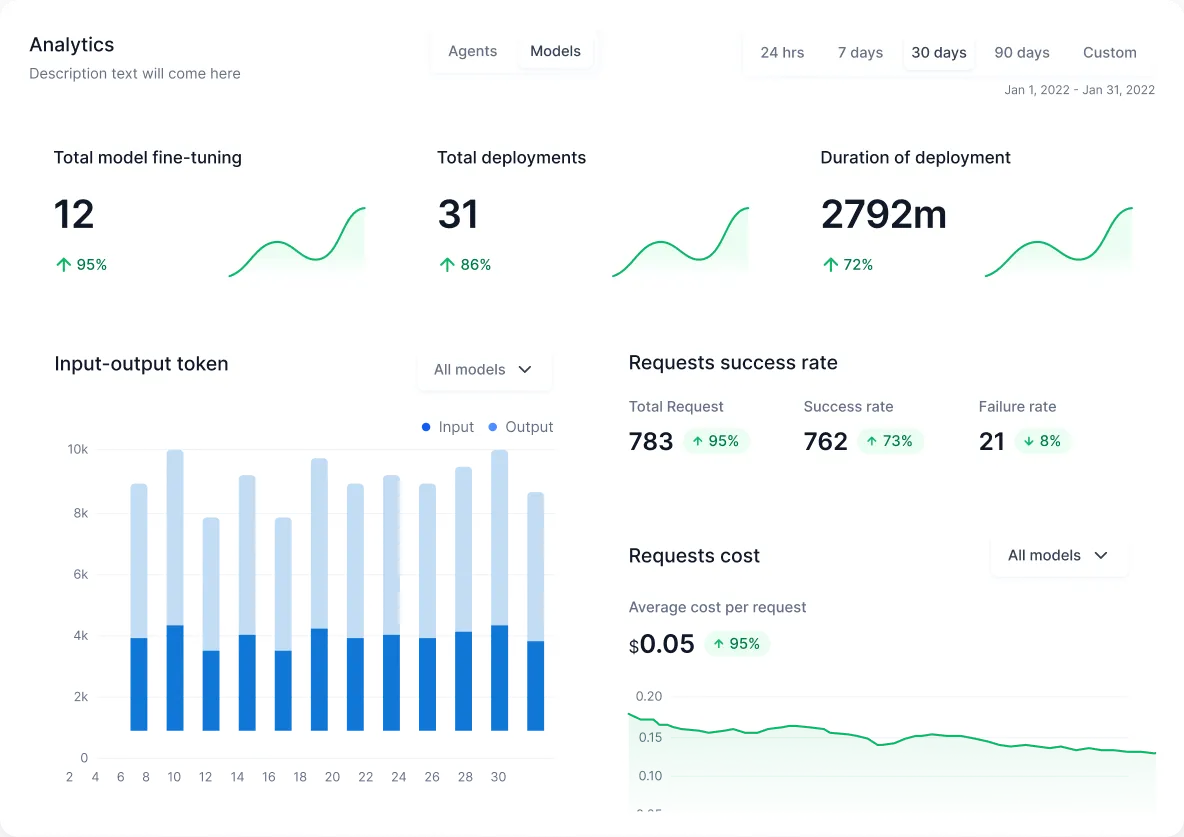

Insights + analytics

Monitor and optimize AI agent performance with comprehensive analytics that track latency, workflow success, and operational efficiency.

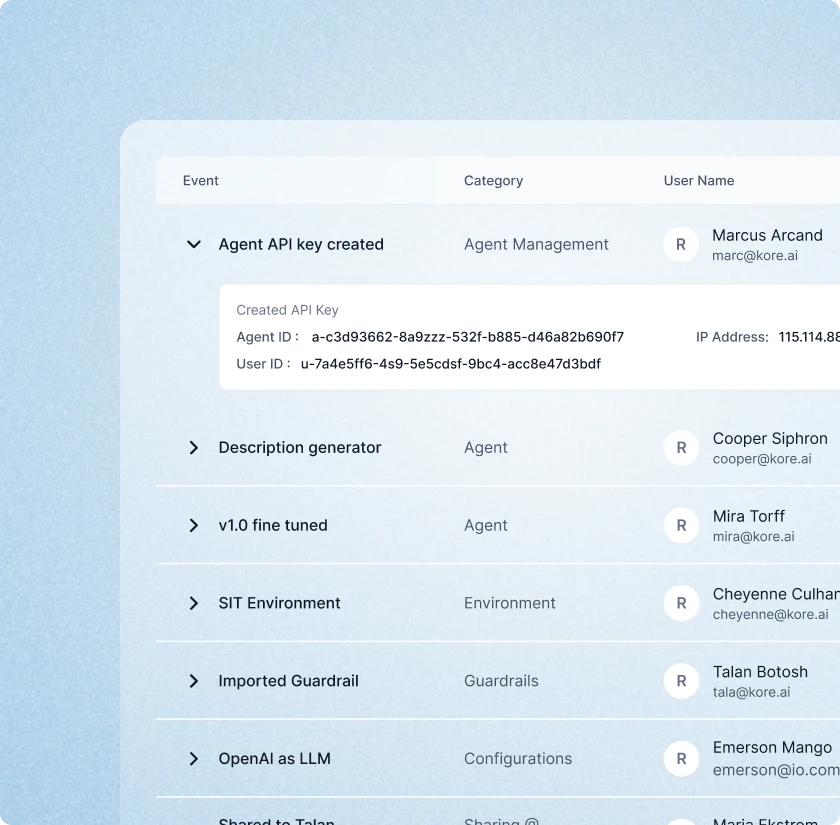

Monitoring events

Track AI agent activity and system changes with detailed records of system events, user actions, and configuration or model changes.
